Fine-tune a Large Language Model (LLM)

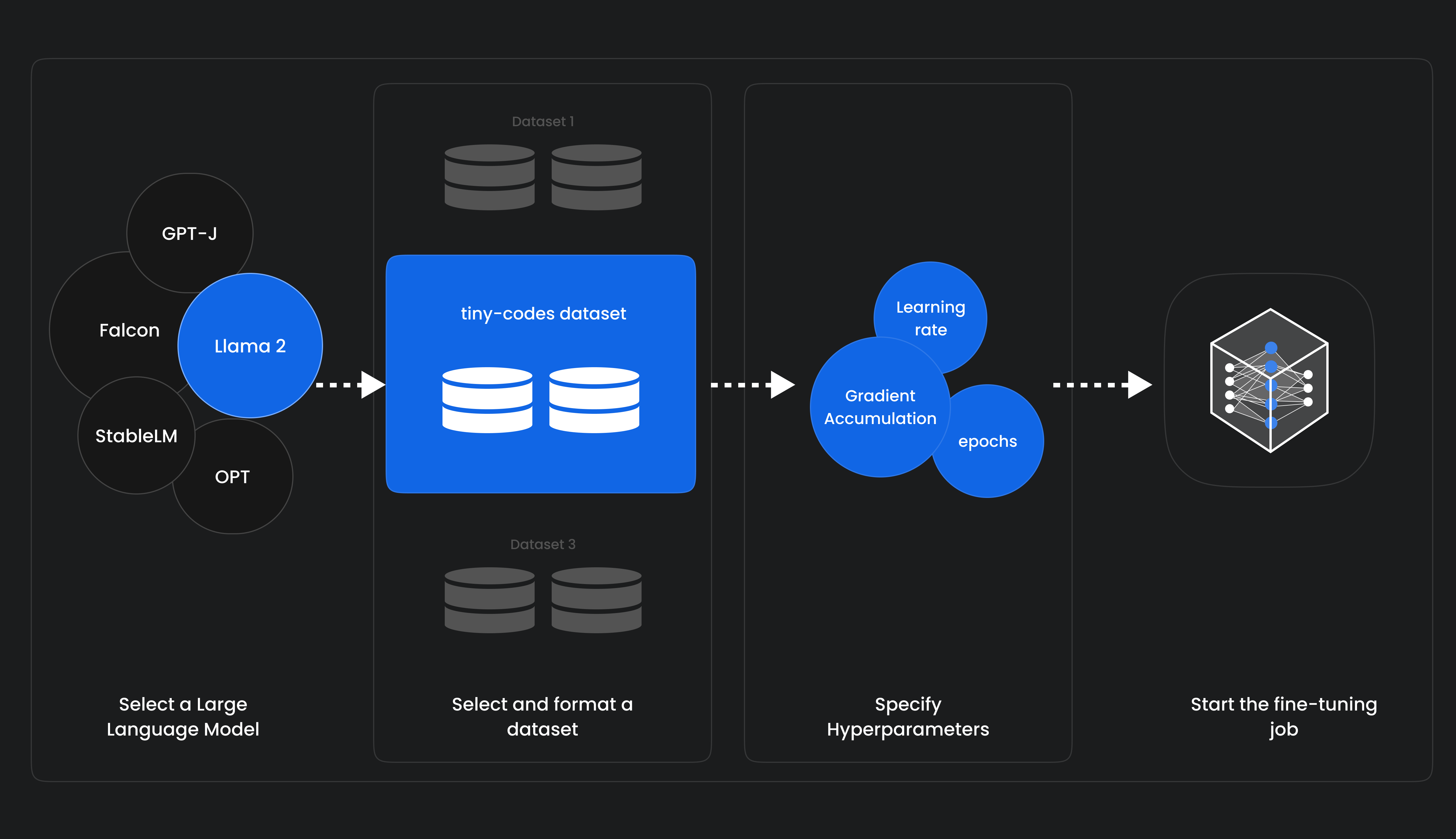

This guide walks you through the process of fine-tuning an LLM on the MonsterAPI Platform. Follow these steps to prepare your data, launch a fine-tuning job, track its progress, and deploy your model.

Welcome to our guide on fine-tuning LLMs. This guide provides detailed walkthroughs to help you launch, track, and understand the billing for fine-tuning Large Language Models (LLMs) on the MonsterAPI platform.

Demo Colab Notebooks with Python Client

Supported Models for Fine-Tuning:

We support over 80 open-source LLMs, offering powerful LLM customization solutions for your needs.

| Model Name | Model Name | Model Name | Model Name | Model Name |

|---|---|---|---|---|

| meta-llama/Meta-Llama-3.1-70B-Instruct | meta-llama/Meta-Llama-3.1-70B | google/gemma-2-27b | google/gemma-2-27b-it | monsterapi/Meta-Llama-3-70B-Instruct_4bit_bnb |

| monsterapi/Meta-Llama-3-70B_4bit_bnb | monsterapi/Llama-2-70b-hf | monsterapi/CodeLlama-70b-hf_4bit_bnb | tiiuae/falcon-40b | mistralai/Mixtral-8x7B-Instruct-v0.1 |

| mistralai/Mixtral-8x7B-v0.1 | codellama/CodeLlama-34b-hf | monsterapi/Mixtral-8x7B-v0.1_4bit_bnb | mistralai/Mistral-7B-Instruct-v0.2 | mistralai/Mistral-7B-v0.3 |

| mistralai/Mistral-7B | meta-llama/Llama-2-7b-chat-hf | meta-llama/Llama-2-7b-hf | codellama/CodeLlama-13b-hf | meta-llama/Llama-2-13b-chat-hf |

| google/gemma-2-9b | google/gemma-2-9b-it | meta-llama/Meta-Llama-3.1-8B-Instruct | meta-llama/Meta-Llama-3.1-8B | HuggingFaceTB/SmolLM-1.7B |

| HuggingFaceTB/SmolLM-1.7B-Instruct | HuggingFaceTB/SmolLM-360M | HuggingFaceTB/SmolLM-360M-Instruct | HuggingFaceTB/SmolLM-135M | HuggingFaceTB/SmolLM-135M-Instruct |

| facebook/opt-350m | apple/OpenELM-1_1B | apple/OpenELM-1_1B-Instruct | TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T | apple/OpenELM-450M-Instruct |

| apple/OpenELM-450M | apple/OpenELM-270M | apple/OpenELM-270M-Instruct | google/codegemma-7b | meta-llama/Llama-2-13b-chat-hf |

| sarvamai/OpenHathi-7B-Hi-v0.1-Base | teknium/OpenHermes-7B | HuggingFaceTB/SmolLM-1.7B | facebook/opt-1.3b | HuggingFaceTB/SmolLM-1.7B |

| Qwen/Qwen2-0.5B | Qwen/Qwen2-0.5B-Instruct | apple/OpenELM-1_1B | facebook/opt-350m | meta-llama/Llama-2-7b-chat-hf |

For getting most up to date list of supported LLMs for fine-tuning, you may query our fine-tuning service contract API.

Click on the above link to learn how to initiate a LLM fine-tuning job on MonsterAPI, from creating a new job and selecting a model to preparing datasets and submitting your job. This guide provides all necessary steps for a successful fine-tuning job launch.

Click on the above link to learn how to prepare your dataset correctly to ensure optimal model performance. Our guide covers the list of supported format and provides examples to help you get started smoothly. Refer to the custom datasets guide for additional information.

Maximum Supported Cutoff Length for Models:

- Models with ≤ 30 billion parameters size: 4096

- Models with > 30 billion parameters size: 512

Monitor the progress of your fine-tuning job, view logs, track metrics using Weights & Biases (if enabled), and download your model weights upon completion.

Understand the cost of fine-tuning jobs, including billing details, per-minute costs, and handling of credits. Ensure an active payment method and subscription to avoid job interruptions.

For questions, reach out to MonsterAPI Support.

Deploy your fine-tuned model easily with Monster Deploy.

After fine-tuning is completed, follow these steps to launch an inference API endpoint:

-

Click on "Deploy model" option from the dropdown on your fine-tuned model's card.

-

Ensure the configuration is correct.

-

Click on "Deploy" button.

This is MonsterAPI's One-click deploy option that launches an inference API endpoint for your fine-tuned model which can be easily queried with the Chat completions OpenAI format endpoint.

Benefits of Monster Deploy:

- Open-Source LLMs: Deploy open-source LLMs as REST API endpoints.

- Fine-tuned LLM Deployment: Deploy LoRA adapters and Inception from the vLLM project for enhanced performance.

- Custom Resource Allocation: Define GPU and RAM settings for efficient deployment.

- Multi-GPU Support: Allocate resources across up to 4 GPUs for handling large models.

Updated 12 months ago