Fine-tune a Large Language Model (LLM)

Welcome to our guide for fine-tuning LLMs! Here you'll find comprehensive walkthroughs that'll assist you with launching, tracking, and understanding the billing process related to finetuning Large Language Models (LLMs) on MonsterAPI Platform.

Demo Colab Notebooks with Python Client

Below, you'll find a list of topics with essential information about each step of the process.

Curated List of Models we support for Finetuning -

| codellama/CodeLlama-13b-hf | codellama/CodeLlama-34b-hf | codellama/CodeLlama-7b-hf | EleutherAI/gpt-j-6b |

| facebook/opt-1.3b | facebook/opt-125m | facebook/opt-2.7b | facebook/opt-350m |

| facebook/opt-6.7b | gpt2 | HuggingFaceH4/zephyr-7b-alpha | HuggingFaceH4/zephyr-7b-beta |

| huggyllama/llama-7b | meta-llama/Llama-2-13b-chat-hf | meta-llama/Llama-2-13b-hf | meta-llama/Llama-2-70b-hf |

| meta-llama/Llama-2-7b-chat-hf | meta-llama/Llama-2-7b-hf | mistralai/Mistral-7B-v0.1 | mistralai/Mixtral-8x7B-v0.1 |

| openlm-research/open_llama_3b | openlm-research/open_llama_7b | Salesforce/xgen-7b-8k-base | sarvamai/OpenHathi-7B-Hi-v0.1-Base |

| stabilityai/stablelm-base-alpha-3b | stabilityai/stablelm-base-alpha-7b | teknium/OpenHermes-7B | tiiuae/falcon-40b |

| tiiuae/falcon-7b | TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T | mistralai/Mixtral-8x7B-Instruct-v0.1 | meta-llama/Llama-3-8B |

| meta-llama/Llama-3-8B-Instruct | meta-llama/Llama-3-70B | meta-llama/Llama-3-70B-Instruct |

-

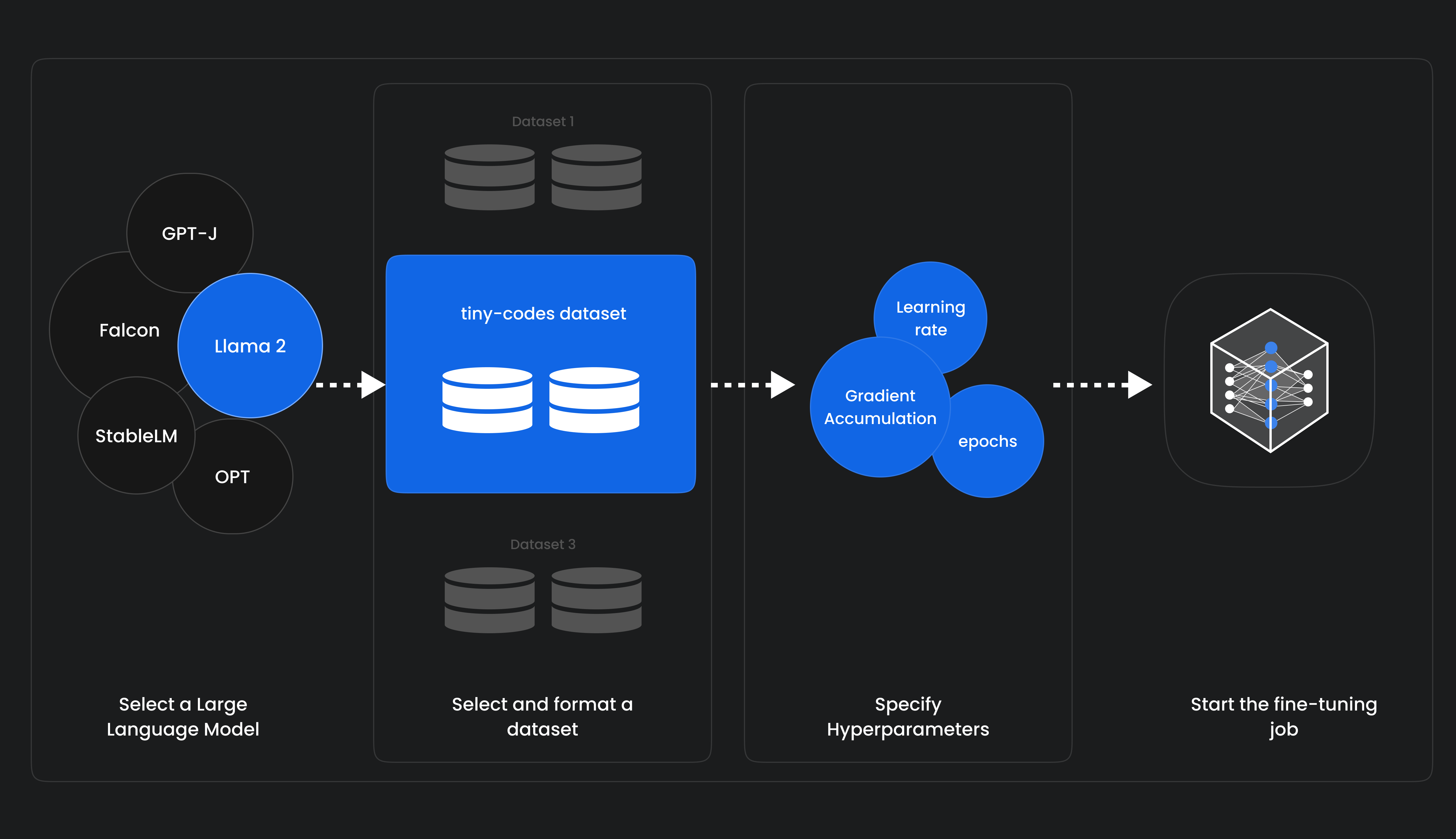

This guide will walk you through step-by-step on how to initiate a fine-tuning job for an LLM. From opening the fine-tuning portal and creating a new job, to choosing a model and dataset preparation, and finally submitting your job. The guide covers all steps in detail, ensuring you have all the tools and information necessary to successfully launch a fine-tuning job.

-

Dataset needs to be prepared in the right format for ensuring that the model learns the right values and parameters. Thus, we provide a text-area while creating a fine-tuning job in which target columns can be specified. Depending on the type of task chosen, you may have to alter the column names. Refer to dataset preparation doc for examples.

You may also refer to our guide on using your own custom datasets for LLM finetuning.

Maximum Supported Cutoff Length for Models:- Models with ≤ 30 billion parameters: 4096

- Models with > 30 billion parameters: 512

-

Once your fine-tuning job is launched, it's essential to monitor its progress. This page helps you understand the different stages a job goes through, from launching to completion. You'll also learn how to view job logs, monitor your job metrics using Weights & Biases (if credentials are provided), and download your fine-tuned model once the job is completed.

-

Understanding the cost associated with fine-tuning jobs is crucial. This page provides detailed information on our billing process, including how jobs are billed, the cost per minute, and what happens if your account runs out of credits. Furthermore, it provides guidance on how to maintain an active payment method and subscription plan to avoid job termination.

Visit each page to get detailed information on each process. If you have any questions or need further assistance, feel free to reach out to our support team.

For any queries, don't hesitate to reach us out at MonsterAPI Support.

Deploying your finetuned model is a straightforward process with MonsterDeploy. Once you've completed the finetuning stage and have your model files ready, follow these steps to deploy your model and start using it through MonsterDeploy's service.

- Access MonsterDeploy Service

Visit the MonsterDeploy documentation at https://developer.monsterapi.ai/docs/monster-deploy-beta and apply for Beta access.

- One-Click Deployment

MonsterDeploy offers a one-click deployment feature. By simply clicking a 'Deploy Model', you can deploy your finetuned model effortlessly. Upon deployment, you'll receive a unique REST API endpoint for querying your model and retrieving results.

- Query Your Model

Utilize the provided REST API endpoint to query your model.

Benefits of MonsterDeploy

- Open-Source LLMs: Easily deploy open-source Large Language Models (LLMs) as REST API endpoints, broadening the range of models at your disposal.

- Finetuned LLM Deployment: Utilize LoRA adapters to deploy finetuned LLMs, ensuring increased throughput with Inception from the vLLM project, enhancing performance during requests.

- Custom Resource Allocation: Optimize resource usage by defining custom GPU and RAM configurations tailored to your specific needs, facilitating efficient model deployment.

- Multi-GPU Support: Benefit from MonsterDeploy's support for resource allocation across up to 4 GPUs, effectively handling large AI models and boosting processing capabilities.

Updated 12 months ago